OpenAI Safety Team Co-Leader Jan Leike Also Resigns

Leike: “AI Safety Losing Ground to Profitable Products”

At OpenAI, Post-Ouster Exodus Wipes Out Anti-Altman Faction

Ilya Sutskever, OpenAI co-founder. /OpenAI

Ilya Sutskever, an OpenAI co-founder and its Chief Scientist, renowned as a ‘genius developer,’ has decided to leave the company, and it has been confirmed that the ‘Superalignment’ team he led has been abruptly disbanded. The team was a kind of ‘safety team’ that researched ways to control AI so that highly advanced future AI does not harm humanity. Since Sam Altman returned as OpenAI’s CEO following last year’s ouster crisis, some analysts say he has begun pushing out the ‘AI doomsayers’ who had rejected him.

According to foreign media outlets such as WIRED on the 17th, members of the ‘Superalignment’ team, which was formed in July last year, are being reassigned to several other teams now that it has been disbanded. OpenAI had previously announced that it would devote 20% of its computing power over four years to this effort, describing the team as an organization that researches technical breakthroughs for controlling and managing AI systems. Yet after just one year, the company dismantled the unprofitable safety team and chose to focus its computing resources on building larger, faster AI models and services.

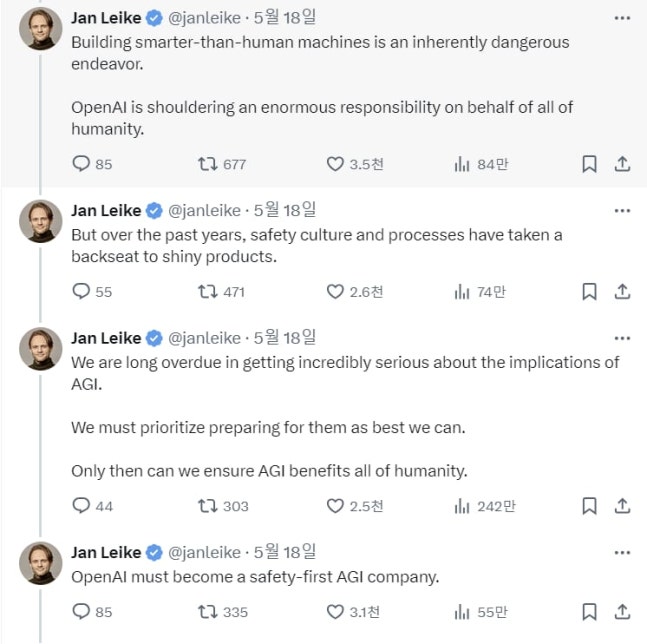

Jan Leike, co-leader of OpenAI’s safety team, resigned, saying in his statement, ‘AI safety at OpenAI is being left behind by profitable products.’ /Jan Leike screenshot

On the 18th, Jan Leike, the team’s co-leader, announced his resignation. “Creating machines that are smarter than humans is inherently dangerous, and OpenAI has a grave responsibility on behalf of all of humanity,” he said in his statement, adding, “But over the past few years, AI safety has fallen behind profitable products.” He further lamented that his team had lost priority and could not secure the computing power and other resources it needed for important work. With internal resources concentrated on developing and launching new products after Altman’s return, the safety research team was effectively unable to conduct proper research.

Leike continued, “OpenAI should prioritize preparing for (the risks of AI) to the best of its ability and devote a much larger share of the company’s capabilities to security, monitoring, safety, and the like,” and stated, “Only then can artificial general intelligence (AGI) benefit humanity.”

In the tech industry, there are concerns that “with Sutskever and Leike resigning one after the other, there is no one left within OpenAI to oppose Altman.” The board members and Sutskever, a key figure who sided with them, have left the company in succession, just three months after the crisis.

After the safety-minded executives stepped down, OpenAI President Greg Brockman and CEO Sam Altman published a lengthy statement on X, saying, “We want to explain some of the questions raised by their departure.” In the statement, the two said, “We have been calling on governments around the world for governance to better prepare for AGI, and we have been laying the groundwork needed to deploy increasingly capable systems safely.” They added, “We understand that we cannot imagine every future scenario, and we will continue safety research and collaborate with governments and many stakeholders.” OpenAI stated, “Instead of keeping the safety team as an independent entity, we will integrate it into the overall research to achieve these goals, and one of the co-founders, John Schulman, will lead AI alignment research.”

However, even with this statement, concerns are emerging in the tech industry that “OpenAI will become even more commercial.” In fact, on the 13th, OpenAI unveiled its most advanced AI assistant features to date: a ‘next-generation AI assistant’ that can converse like a person and, through a camera, see and sense things as the user does. A tech industry official stated, “Since the safety team has been dismantled, it has become difficult to know what OpenAI is doing regarding AI safety.”
